Langtrain

Backed by World-Class AI Infrastructure.

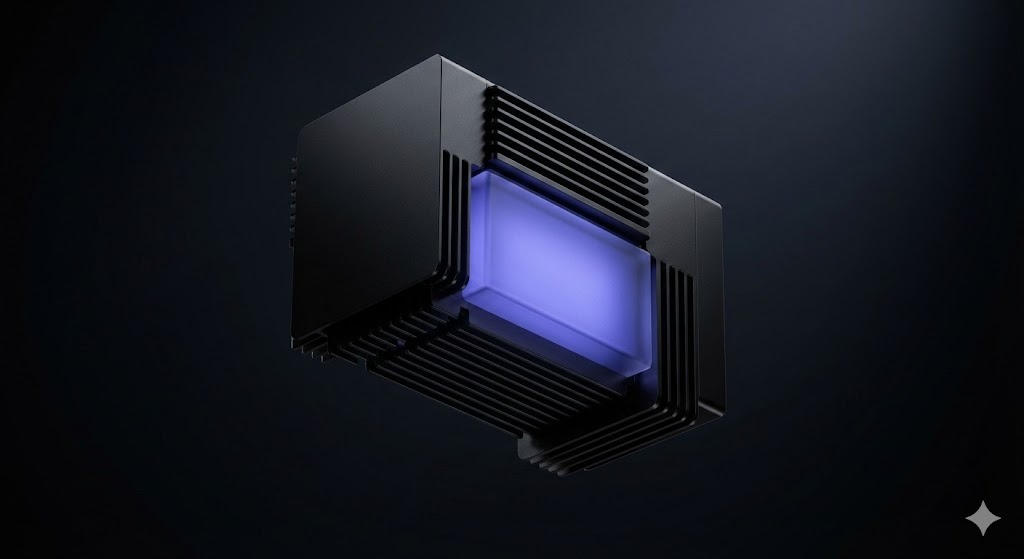

INFRASTRUCTURE FOR INTELLIGENCE

A complete toolchain for training, evaluating, and deploying custom models. Built for engineers who need control, not black boxes.

Fine-Tuning Engine

Full-parameter and LoRA training on your own infrastructure. Control every hyperparameter yourself, or let our auto-scheduler optimize them.

Evaluation Suite

Run automated benchmark suites against every checkpoint to catch regressions before deployment.

Global Mesh

One-click deployment to a global edge network. Your models are replicated instantly to 35+ regions.

Infrastructure Without Compromise.

Deploy text-generation models on your own visual computing cloud. Zero egress. Infinite scale.

Human in the Loop

Private Vault

Local Compute

Global Neural Network

Stop renting intelligence.

Generic APIs are great for prototypes. Langtrain is for production. Own the weights, own the future.

Latency

Local inference eliminates round-trips to a remote API. Your model runs right next to your application logic.

Privacy

Zero data leakage. Training and inference run on your infrastructure, so your data never leaves your VPC.

Cost

Stop paying rent on intelligence. Train once, run forever. No per-token tax eating your margins.

Reliability

No dependency on OpenAI's status page. You own the model, you own the uptime.

Deploy Your SLM Everywhere

A specialized small language model (SLM) is only as powerful as the tools it can access. Langtrain connects your SLM to your entire ecosystem, from proprietary databases to custom Slack agents.

Data Sources

Built-in Connector

Deployment

Built-in Connector

Tools

Built-in Connector